Technical SEO is one of the fundamental pillars of SEO, alongside content strategy and Digital PR.

Technical SEO is the foundation of your website and acts as a base for performance growth. A strong technical foundation can accelerate growth, but a poor technical setup can hinder organic performance even if you have a strong backlink profile, content expertise, and an enticing brand.

What is a technical SEO audit?

A technical SEO audit reviews all the technical elements on your site to identify any issues that may be impacting your SEO. This can also include backend elements that only search engines will be concerned with. Once any issues are identified, a plan of action can be put together to find the solution and fix the issues.

Why is a technical SEO Audit important?

Having a good technical SEO foundation is one of the building blocks for a successful SEO strategy. If there is an issue with your site, it can prevent Google from crawling it and finding the relevant information. This could then impact your rankings. You can find potential blockers that may impact your site’s visibility by conducting an audit. Technical SEO audits are not only done to find issues but also to help you find opportunities to increase your site’s visibility.

How to perform a technical SEO audit

Step 1: Crawl your site

The first step of a technical SEO audit is to crawl the site, which can be done with tools such as Screaming Frog, Lumar and Ahrefs. These tools mimic how search engines crawl your site, show you how they see it, and report any issues that could hinder your site’s performance. Different tools will present this in different ways.

When we talk about crawling, we mean the ability of a search engine to find and access your website. A search engine will follow links on your site and see the content that you have on your site.
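To make the idea of "following links" concrete, here is a minimal sketch of the first thing a crawler does with a page: collect the links and keep the internal ones. It uses only the Python standard library. The `example.com` URLs and the sample HTML are made up for illustration, and a real crawler such as Screaming Frog does far more (fetching, rendering, queueing, respecting robots.txt).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def internal_links(page_url, html):
    """Return the sorted, de-duplicated internal URLs linked from one page."""
    collector = LinkCollector()
    collector.feed(html)
    site = urlparse(page_url).netloc
    found = set()
    for href in collector.hrefs:
        # Resolve relative links and drop #fragments, which point at the same page.
        absolute = urljoin(page_url, href).split("#")[0]
        if urlparse(absolute).netloc == site:
            found.add(absolute)
    return sorted(found)


# Hypothetical page content, for illustration only.
sample_html = """
<a href="/blog">Blog</a>
<a href="/blog#top">Blog (top)</a>
<a href="https://example.org/partner">Partner</a>
<a href="contact">Contact</a>
"""
links = internal_links("https://example.com/", sample_html)
print(links)
```

A crawler repeats this step for every internal URL it discovers, which is why a page with no internal links pointing to it is unlikely to be found.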

Step 2: Check your site’s crawlability and indexability

Indexability is the ability of a search engine to analyse or ‘read’ the content on a page that it has crawled and add it to its index, which means the web page can show up in the search results for relevant keywords and search terms.

When doing a technical audit, it is good to start with any crawling and indexability issues, as any issues with these elements could prevent the site from being found by Google or users. A page can be crawled, but it does not mean a search engine will index it. This could be for a number of reasons. Below, we have highlighted how to identify standard crawling and indexing issues.

Robots.txt file

A robots.txt file tells search engine crawlers which URLs they can access. Sometimes, certain page types or even the whole site can be blocked by the robots.txt file. This can be on purpose, for example, if you don’t want to waste crawl budget or if there are certain pages, such as user accounts, that you don’t want to be crawled. To stop URLs from being crawled, a disallow rule should be applied.

However, if your site or URLs are getting blocked from being crawled when you don’t want them, you will be able to see this in your technical audit, as these URLs will not appear in your crawl. If this is the case, you will need to check that there hasn’t been a disallow rule applied to these URLs by accident or that the right robots meta tags have been added.

Similarly, there may be sets of pages that are currently being crawled which you do not want to be. A good example of this on an eCommerce website is filter parameters which can create thousands of URLs which do not change the targeting of a page. To ensure search engines are focussed on the URLs we want to rank, it is often a good idea to disallow these in robots.txt.
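As a rough illustration of how disallow rules behave, the sketch below tests a few URLs against a robots.txt file using Python's standard `urllib.robotparser`. The rules and `example.com` URLs are hypothetical. Note one limitation: this parser does simple prefix matching and does not understand the `*` wildcards that Google supports, so wildcard rules for filter parameters need to be checked in a dedicated tool instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
robots_txt = """\
User-agent: *
Disallow: /account/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())


def is_crawlable(url, user_agent="Googlebot"):
    """Return True if the parsed rules allow this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


for url in (
    "https://example.com/products/shoes",
    "https://example.com/account/orders",
    "https://example.com/search",
):
    print(url, "->", "allowed" if is_crawlable(url) else "blocked")
```

Running the URLs you expect to rank through a check like this is a quick way to spot a disallow rule that was applied by accident.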

Robots meta tags control how URLs are crawled and indexed

A robots meta tag is an HTML snippet that tells search engines how they should crawl a page and whether it should be indexed. One of the most commonly used (and misused) directives is “noindex”, which tells search engines not to index the page it is on. There is also a “nofollow” directive, which tells search engines not to follow the links on a page.
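A minimal sketch of how you might read these directives from a page's HTML is shown below, using only the Python standard library. The class and function names are our own, and the sample markup is illustrative; crawling tools report the same information in their indexability columns.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects the directives from any <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, nofollow".
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_directives(html):
    """Return the set of meta robots directives found in the HTML."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
directives = robots_directives(page)
print("noindex" in directives)   # True: this page asks not to be indexed
```

A page with no meta robots tag returns an empty set, which search engines treat as permission to index and follow.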

Canonical tags

A canonical tag is an HTML snippet that defines the primary version of a duplicate or near-duplicate page on your site. It tells Google which version of the page should be indexed and shown in the SERPs.

Canonical tags are used on duplicate pages because duplicate content on the site can lead to keyword cannibalisation. Keyword cannibalisation can lead to multiple pages on a site competing for the same rankings and harming each other’s rankings in the process.

For example, if you have these two pages on your site

You may want to implement a canonical tag to the main blog URL to prevent them from competing with each other in the SERPs.

This means that on this URL, https://thisisnovos.com/blog/news the following code would be implemented:

- <link rel="canonical" href="https://thisisnovos.com/blog" />

By using canonical tags correctly, you help Google index only one version of a set of duplicate pages and avoid any keyword cannibalisation issues.

Self-referencing canonical tags can also be used. This means a page will have a canonical tag pointing to itself.
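The sketch below shows one way to check which of these cases a page falls into: no canonical, a self-referencing canonical, or a canonical pointing elsewhere. It is an illustration using the Python standard library, with the blog URLs from the example above; the function name and the trailing-slash comparison are our own simplifications.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rel = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and self.canonical is None:
            self.canonical = attr.get("href")


def canonical_status(page_url, html):
    """Classify a page as missing, self-referencing, or canonicalised elsewhere."""
    parser = CanonicalParser()
    parser.feed(html)
    if parser.canonical is None:
        return "missing"
    # Simplification: treat URLs that differ only by a trailing slash as equal.
    if parser.canonical.rstrip("/") == page_url.rstrip("/"):
        return "self-referencing"
    return "canonicalised to " + parser.canonical


head = '<head><link rel="canonical" href="https://thisisnovos.com/blog" /></head>'
print(canonical_status("https://thisisnovos.com/blog/news", head))
print(canonical_status("https://thisisnovos.com/blog", head))
```

In an audit, the pages worth a closer look are usually the "missing" ones and any page canonicalised to a URL you did not intend.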

Create an XML Sitemap and submit it in Google Search Console

An XML sitemap will help Google crawl your site. It will be a crawler’s first port of call, and is used by search engines to determine which URLs are available to crawl on the site. An XML sitemap should be hierarchical, so this will also help search engines navigate and understand the site’s structure. An XML sitemap should only contain URLs that return a 200 status code, with no redirecting URLs.

XML sitemaps can also be uploaded into Google Search Console to directly give information to Google about which URLs should be regularly crawled.
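To check the "200 status only" rule, you can compare the URLs in the sitemap with the status codes from your crawl. The sketch below assumes you already have those status codes as a dictionary (here called `crawl_statuses`, a hypothetical export from your crawling tool); the sitemap and URLs are invented for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace used by the standard sitemap protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return every <loc> URL listed in a sitemap."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def urls_to_remove(xml_text, status_by_url):
    """List sitemap URLs that did not return 200 (or were never crawled)."""
    return [url for url in sitemap_urls(xml_text) if status_by_url.get(url) != 200]


# Hypothetical sitemap and crawl results, for illustration only.
sample_sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old-page</loc></url>
  <url><loc>https://example.com/blog</loc></url>
</urlset>"""
crawl_statuses = {
    "https://example.com/": 200,
    "https://example.com/old-page": 301,
    "https://example.com/blog": 200,
}
flagged = urls_to_remove(sample_sitemap, crawl_statuses)
print(flagged)
```

Any URL this flags is either redirecting, erroring, or missing from the crawl, and should be fixed or removed from the sitemap.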

If you want to understand more about indexing, look at our ultimate guide to indexing signals in ecommerce sites.

Step 3: Analyse Internal Links and Site Structure

Information Architecture

Information architecture (IA) refers to organising, targeting, and structuring the pages on your site to make it easy for users to find and navigate. This makes it important that a category includes links to all relevant subcategory pages, and the individual subcategory pages should all include links back to the main parent page.

The level of subcategories will depend on the size of your site. A small site should not have too many subcategories, as this can make it confusing for users to navigate, and a big site should not have too few, as this could lead to you missing out on key terms by not having a dedicated page to target them. Therefore, it’s important to look at the keyword targeting of the site. For example, does the page H1 match the search intent? If you want more information on internal linking and to understand best practices, look at our blog on how to leverage internal linking on ecommerce sites.

Hierarchy and internal links

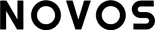

Internal links help establish the hierarchy and structure of a website. Links create a logical flow from the homepage to other pages, indicating which pages are most important and relevant, and help search engines understand your site structure.

This means that priority pages should be linked to from the global navigation and homepage, key pages should be linked to through attribute links and breadcrumbs, and anchor text should be optimised throughout the site.

This will help strategically guide both users and search engine crawlers to relevant content, ensuring maximum visibility for targeted keywords and that SEO value is passed throughout the site. The image below shows how SEO value from the homepage is distributed throughout a site by internal linking.

Housekeeping

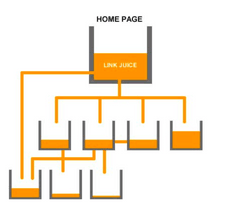

When analysing your internal links, it is important to check for any broken links that point to a 404 page, as these waste link equity and give users a negative experience. You should also look at any internal links that pass through a 301 or 302 redirect. A 301 is the HTTP response status code used for permanent redirects. Any internal links that 301 redirect should be updated so that they go straight to a page with a 200 status, so you’re not wasting any SEO value.

Where a redirect is needed, it should be a 301, so you will also need to check that there aren’t any 302 redirects. A 302 is a temporary redirect and will dilute any SEO value for the new pages. These are normally implemented when a product is out of stock (OOS) but will be coming back.
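The following sketch shows the logic behind tracing an internal link through its redirects. It works on crawl data rather than live requests: `responses` is a hypothetical dictionary mapping each URL to its status code and redirect target, as you might export from a crawling tool. The paths are invented for illustration.

```python
REDIRECT_CODES = (301, 302, 307, 308)


def trace_redirects(start_url, responses, max_hops=10):
    """Follow recorded redirects from start_url.

    `responses` maps URL -> (status_code, redirect_target_or_None).
    Returns (list_of_urls_visited, outcome_description).
    """
    hops = [start_url]
    seen = {start_url}
    url = start_url
    while len(hops) <= max_hops:
        status, target = responses.get(url, (None, None))
        if status not in REDIRECT_CODES or target is None:
            return hops, f"ends at status {status}"
        if target in seen:
            # The redirect points back to a URL already visited.
            return hops + [target], "loop"
        hops.append(target)
        seen.add(target)
        url = target
    return hops, "too many hops"


# Hypothetical crawl data, for illustration only.
responses = {
    "/sale": (301, "/offers"),
    "/offers": (302, "/deals"),
    "/deals": (200, None),
    "/x": (301, "/y"),
    "/y": (301, "/x"),
}
print(trace_redirects("/sale", responses))
print(trace_redirects("/x", responses))
```

Any result with more than two URLs in the list is a redirect chain: the internal link should be updated to point at the final URL directly.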

Step 4: Check your Site’s Speed and Core Web Vitals

Tools such as PageSpeed Insights and GTmetrix will tell you how long your site takes to load. Page speed is important to user experience and is also a ranking factor. If you have poor site speed, then these tools should highlight what action can be taken to optimise your site.

In 2020, Google announced Core Web Vitals (CWV), a set of metrics that measure loading performance, interactivity and visual stability. The CWV report can be seen in Google Search Console. The report groups URLs by status: poor, needs improvement and good.

CWVs are a subset of factors that will be part of Google’s “page experience” score, which aims to improve users’ page experience and, as a result, is a ranking factor.

The CWV metrics are:

- Largest contentful paint (LCP): This measures how long it takes for the largest content element on a page to load. LCP should be less than 2.5 seconds to provide a good user experience.

- Cumulative layout shift (CLS): This measures how much a page’s layout shifts unexpectedly as it loads. CLS should be less than 0.1 for a good user experience.

- First input delay (FID): This measures how long it takes for the browser to respond to a user’s first interaction with a page, such as a click or a tap. FID should be less than 100 milliseconds for a good user experience.

- Interaction to next paint (INP): This will replace FID in March 2024 and is the time it takes for the browser to respond to a user’s next interaction with a page, such as a click or a tap after the page has finished loading. INP should be 200 milliseconds or less for a fast and responsive experience.
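The thresholds above can be turned into a small lookup that labels a measurement as good, needs improvement or poor. This is a sketch: the "good" limits come from the list above, while the upper limits (4 seconds, 0.25, 300 ms and 500 ms) are taken from Google's published Core Web Vitals guidance rather than from this article, so check them against the current documentation. The sample values are made up.

```python
# metric: (upper limit for "good", upper limit for "needs improvement")
THRESHOLDS = {
    "LCP": (2.5, 4.0),    # seconds
    "CLS": (0.1, 0.25),   # unitless layout shift score
    "FID": (100, 300),    # milliseconds
    "INP": (200, 500),    # milliseconds
}


def rate(metric, value):
    """Label a Core Web Vitals measurement using the thresholds above."""
    good_limit, needs_improvement_limit = THRESHOLDS[metric]
    if value <= good_limit:
        return "good"
    if value <= needs_improvement_limit:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for one page.
measurements = {"LCP": 2.1, "CLS": 0.18, "INP": 650}
for metric, value in measurements.items():
    print(metric, value, rate(metric, value))
```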

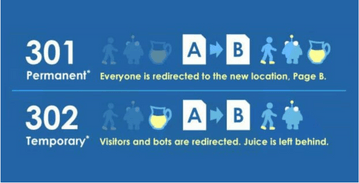

Step 5: Audit Technical On-page elements

In a technical audit, some of the easiest and quickest wins are looking at on-page elements, including title tags, canonical tags, meta descriptions, and header tags. When you crawl your site, it will flag if any of these elements are missing, too short or too long, and if there are multiple elements on a page, such as having multiple H1s, which can confuse search engines regarding what the pages are about.
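As an illustration of the checks a crawler runs here, the sketch below inspects one page's HTML for a missing or overly long title, a missing meta description and missing or multiple H1s. The 60-character title limit is an assumption chosen for the example, not a fixed rule, and the sample markup is invented.

```python
from html.parser import HTMLParser


class OnPageParser(HTMLParser):
    """Captures the title text, meta description and number of H1s."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.h1_count = 0
        self.meta_description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "meta" and (attr.get("name") or "").lower() == "description":
            self.meta_description = attr.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def on_page_issues(html, max_title_length=60):
    """Return a list of on-page issues; max_title_length is an assumed limit."""
    parser = OnPageParser()
    parser.feed(html)
    issues = []
    title = parser.title.strip()
    if not title:
        issues.append("missing title")
    elif len(title) > max_title_length:
        issues.append("title too long")
    if not parser.meta_description:
        issues.append("missing meta description")
    if parser.h1_count == 0:
        issues.append("missing H1")
    elif parser.h1_count > 1:
        issues.append("multiple H1s")
    return issues


sample_page = (
    "<html><head><title>Trail Running Shoes</title></head>"
    "<body><h1>Trail Running Shoes</h1><h1>New In</h1></body></html>"
)
print(on_page_issues(sample_page))
```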

As well as looking at the reports the tools will provide on the on-page elements, it is important that you review these to ensure you are targeting relevant keywords that you want to rank for. This includes on-page copy and making sure that it matches the search intent. For example, a size guide needs to have a table with all the relevant information, not an essay of text.

To find out more about on-page elements, take a look at our blog on what is On-Page SEO.

Step 6: Audit your Schema tags

Schema markup, which is also known as structured data, is the language search engines use to read and understand the content on your page. Having this extra level of context for search engines will allow them to display rich results, like the example below:

Schema markup doesn’t necessarily improve your rankings directly. However, it can help your site to achieve rich snippets. Rich snippets can help improve click-through rates and encourage potential customers to visit your site over competitors. Common rich snippets include review stars, prices, breadcrumbs and stock availability.
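Schema markup is most often added to a page as JSON-LD inside a script tag. The sketch below, which is illustrative only, pulls every JSON-LD block out of a page and reports its schema type, flagging any block that is not valid JSON. The product data is invented, and this only checks that the markup parses; it does not validate it against Google's rich result requirements.

```python
import json
from html.parser import HTMLParser


class JsonLdParser(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json">."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_jsonld = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def schema_types(html):
    """Return the @type of each JSON-LD item, or 'INVALID JSON' if it fails to parse."""
    parser = JsonLdParser()
    parser.feed(html)
    types = []
    for block in parser.blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            types.append("INVALID JSON")
            continue
        items = data if isinstance(data, list) else [data]
        types.extend(str(item.get("@type")) for item in items if isinstance(item, dict))
    return types


# Hypothetical page: one valid Product block and one block with a stray comma.
page = """
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product", "name": "Trail Shoe",
 "offers": {"@type": "Offer", "price": "89.00", "priceCurrency": "GBP",
            "availability": "https://schema.org/InStock"}}
</script>
<script type="application/ld+json">{"@type": "BreadcrumbList",}</script>
"""
print(schema_types(page))
```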

An example of this can be seen below:

When doing a technical audit, you should check for any errors with your schema markup or if there is any opportunity to add any schema markups that might be missing. Tools that can be used to check your schema are:

Step 7: Review your Site’s Security

One step that is often neglected is checking the site’s security. This can be checked in your crawling tools and Google Search Console. These tools will tell you if your site is at risk of hacking and malware attacks. Some of the most common security issues we see are:

- Lack of SSL Certificate – Websites without an SSL certificate and serving content over HTTP instead of HTTPS are flagged by search engines.

- Mixed content issues – this is when a secure page (HTTPS) includes insecure resources (HTTP).

- Redirect chains and loops – Incorrectly implemented redirects, especially redirect chains or loops, can be exploited by attackers and negatively impact SEO.

- Missing security headers – Security headers play a crucial role in enhancing the security posture of a web application by providing instructions to the web browser on how to handle various aspects of the page.
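Two of these checks are easy to sketch in code: spotting insecure resources on a secure page, and listing security headers that a response does not send. The header list below is our own assumption of commonly recommended headers rather than a definitive standard, the regular expression is a rough check on `src` attributes only, and the sample data is invented.

```python
import re

# Assumed list of commonly recommended headers; adjust to your own policy.
RECOMMENDED_HEADERS = [
    "Strict-Transport-Security",
    "Content-Security-Policy",
    "X-Content-Type-Options",
    "Referrer-Policy",
]


def missing_security_headers(response_headers):
    """Return recommended headers absent from a dict of response headers."""
    present = {name.lower() for name in response_headers}  # header names are case-insensitive
    return [name for name in RECOMMENDED_HEADERS if name.lower() not in present]


def mixed_content(page_url, html):
    """Return http:// resources loaded via src attributes on an HTTPS page."""
    if not page_url.startswith("https://"):
        return []
    return re.findall(r"""\bsrc=["'](http://[^"']+)["']""", html)


# Hypothetical response headers and markup, for illustration only.
headers = {
    "content-type": "text/html",
    "strict-transport-security": "max-age=31536000",
}
html = (
    '<img src="http://example.com/logo.png">'
    '<script src="https://example.com/app.js"></script>'
)
print(missing_security_headers(headers))
print(mixed_content("https://example.com/", html))
```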

Step 8: Check your Site’s Accessibility

Companies will spend a lot of time and money trying to make their website better for users but will then forget to make it 100% accessible to all users. This should be a key factor that people think about when they are designing and developing websites, but the accessibility factor also overlaps with SEO. Elements that are related to accessibility are ALT text (alternative text) for images, title tags, metadata, readability, JavaScript and internal links. It’s important to note that there are more factors that go into making a site accessible, but the ones that have been mentioned are an excellent place to start.
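ALT text is one of the simplest of these elements to audit. The sketch below lists images that have no `alt` attribute at all. It deliberately does not flag an empty `alt=""`, since that is the accepted way to mark a purely decorative image. The image paths are invented for illustration.

```python
from html.parser import HTMLParser


class AltTextParser(HTMLParser):
    """Records the src of every <img> that has no alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attr = dict(attrs)
            # alt="" is allowed (decorative image); a missing attribute is not.
            if "alt" not in attr:
                self.missing_alt.append(attr.get("src"))


def images_missing_alt(html):
    """Return the src values of images without an alt attribute."""
    parser = AltTextParser()
    parser.feed(html)
    return parser.missing_alt


sample = (
    '<img src="/hero.jpg" alt="Runner on a mountain trail">'
    '<img src="/divider.png" alt="">'
    '<img src="/shoe.jpg">'
)
print(images_missing_alt(sample))
```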

Step 9: Optimise for mobile usability

Google is mobile-first, which means that Google will use your mobile site for indexing and ranking. When you carry out a technical audit, it’s important that you set your crawl settings to crawl the mobile viewport and ensure that your site is mobile-friendly and responsive. As most common CMSs are mobile-friendly by default, this shouldn’t be an issue but is something to consider if you are working with a custom-built CMS.

Step 10: Prioritise your results

Now that the hard part is done and you have a list of all the tech tasks you need to tackle, you will need to figure out how to prioritise them.

Quick wins and smaller tasks that are easy to implement are often done first but may not impact your SEO the most. Using the ICE framework is a good way to prioritise your tech tasks. The ICE framework is a method of scoring development tickets in order to determine prioritisation. It scores any technical SEO ticket on three factors: impact, confidence, and effort.

The overall ICE prioritisation score is calculated by (Impact x Confidence) / Effort (based on the scoring system below). Each factor is marked out of 5.

An example of this can be seen below:

Technical ticket: Fix multiple H1s across the site

- Impact – 4

- Confidence – 4

- Effort – 2

ICE score – (4×4)/2 = 8

By doing this for each tech ticket you have identified, you will be able to see which tech ticket will have the biggest impact on your site and help you to prioritise them.
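The calculation is simple enough to script once you have more than a handful of tickets. The sketch below applies the formula above and sorts tickets by score. It assumes each factor is scored from 1 to 5 (so effort is never zero); the first ticket is the example above, and the other two are hypothetical tickets added to show the ranking.

```python
def ice_score(impact, confidence, effort):
    """Return (impact x confidence) / effort, with each factor scored 1 to 5."""
    for value in (impact, confidence, effort):
        if not 1 <= value <= 5:
            raise ValueError("each factor must be between 1 and 5")
    return (impact * confidence) / effort


# (ticket, impact, confidence, effort)
tickets = [
    ("Fix multiple H1s across the site", 4, 4, 2),
    ("Hypothetical ticket A", 5, 3, 5),
    ("Hypothetical ticket B", 2, 5, 1),
]

# Highest score first: these are the tickets to prioritise.
ranked = sorted(tickets, key=lambda t: ice_score(*t[1:]), reverse=True)
for name, impact, confidence, effort in ranked:
    print(f"{ice_score(impact, confidence, effort):>5.1f}  {name}")
```

Note how a low-impact but very low-effort ticket can outrank a high-impact ticket that is expensive to build, which is exactly the trade-off the framework is designed to surface.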

To understand in more detail how you can prioritise your tech tickets, take a look at our blog on how to use the ICE framework to prioritise technical tickets for SEO.

To conclude

A technical SEO audit should ensure your site is technically sound and you are not missing out on any potential opportunities to improve your site. This will help with your site’s visibility and overall user experience.

Regular audits can help businesses stay ahead of Google algorithm updates and maintain online performance.

If you need help with your site’s technical SEO, feel free to get in touch with us.